The biggest fears that humans have about artificial intelligence

This article looks at three main concerns about artificial intelligence that technology experts say deserve serious attention.

Late last year, the former CEO of Google likened AI to nuclear war, underscoring how serious the concerns about the technology have become. Such concerns have prompted countries around the world to seek to regulate artificial intelligence through laws and restrictions.

Artificial intelligence growing too powerful

One of the most common concerns about artificial intelligence is that the technology could escape the control of its creators.

Artificial General Intelligence (AGI) refers to artificial intelligence that is as intelligent as, or even smarter than, humans across a wide range of tasks. Current AI models are not sentient, but they are designed to imitate human behavior. For example, ChatGPT is designed so that users feel as if they are chatting with a human.

Although experts disagree on how to define AGI, they agree that the technology poses potential risks to humanity and needs further study.

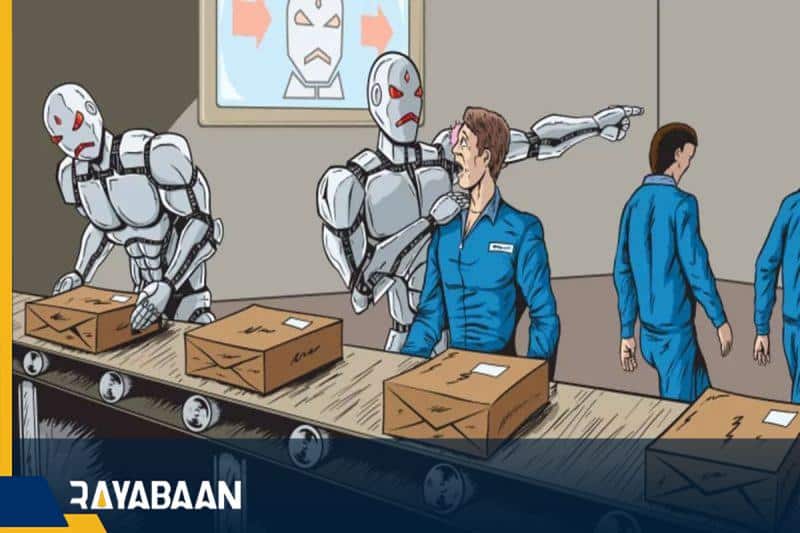

Widespread job losses

Artificial intelligence also threatens many jobs and could cause widespread unemployment across various sectors.

Abhishek Gupta, founder of the Montreal AI Ethics Institute, says that job losses caused by artificial intelligence are the “most real, the most important and perhaps the most pressing” threat posed by this technology.

Company CEOs have also announced plans to use artificial intelligence. For example, IBM CEO Arvind Krishna recently announced that the company has reduced hiring for roles that may be replaced by artificial intelligence in the future.

Biases of artificial intelligence

If artificial intelligence systems are used more widely to aid social decision-making, systematic bias could become a serious risk, experts say.

So far, there have been several examples of bias in generative AI systems, including early versions of ChatGPT, the tool built by OpenAI, which gave users strange and inappropriate answers.

Gupta explains that undetected biases in models used to make real-world decisions can have serious consequences.